Introducción a la Computación Científica y de Alto Rendimiento

Table of Contents

- 1. Resources

- 2. Introduccion a la programacion en C++

- 3. Errors in numerical computation: Floating point numbers

- 4. Makefiles

- 5. Standard library of functions - Random numbers

- 6. Workshop: How to install programs from source

- 7. Debugging

- 8. Unit Testing : Ensuring fix and correct behavior last

- 9. Profiling

- 10. Optimization

- 11. Numerical libraries in Matrix Problems

- 12. Performance measurement for some matrix ops

- 13. Introduction to High Performance Computing

- 13.1. Reference Materials

- 13.2. Introduction

- 13.3. Basics of parallel metrics

- 13.4. Practical overview of a cluster resources and use

- 13.5. Openmp, shared memory

- 13.6. MPI, distributed memory

- 13.7. Simple parallelization: farm task and gnu parallel or xargs

- 13.8. Threads from c++11

- 13.9. Parallel algorithms in c++

- 13.10. TODO Gpu Programming intro

- 14. Introduction to OpenMp (Shared memory)

- 15. Introduction to MPI (distributed memory)

- 15.1. Intro MPI

- 15.2. Core MPI

- 15.3. Application: Numerical Integration (Send and Recv functions)

- 15.4. More Point to point exercises

- 15.5. Collective Communications

- 15.6. More examples :

MPI_Gather,MPI_Scatter - 15.7. Collective comms exercises

- 15.8. Practical example: Parallel Relaxation method

- 15.9. Debugging in parallel

- 15.10. More Exercises

- 16. HPC resource manager: Slurm

- 17. Complementary tools

- 17.1. Emacs Resources

- 17.2. Herramientas complementarias de aprendizaje de c++

- 17.3. Slackware package manager

- 17.4. Taller introductorio a git

- 17.4.1. Asciicast and Videos

- 17.4.2. Introducción

- 17.4.3. Creando un repositorio vacío

- 17.4.4. Configurando git

- 17.4.5. Creando el primer archivo y colocandolo en el repositorio

- 17.4.6. Ignorando archivos : Archivo

.gitignore - 17.4.7. Configurando el proxy en la Universidad

- 17.4.8. Sincronizando con un repositorio remoto

- 17.4.9. Consultando el historial

- 17.4.10. Otros tópicos útiles

- 17.4.11. Práctica online

- 17.4.12. Enviando una tarea

- 17.4.13. Ejercicio

- 17.5. Plot the data in gnuplot and experts to pdf/svg

- 17.6. Plot the data in matplotlib

- 17.7. Virtualbox machine setting up

- 18. Bibliografía

1. Resources

1.1. Presentations for the whole course

1.2. Videos recorded from classes

1.3. Shell

1.4. git

1.5. Ide’s, g++ tools [5/5]

1.5.1. Resources

1.5.2. Intro

[X]Rename Executable

g++ helloworld.cpp -o helloworld.x ./helloworld.x[X]Check some ide- Codeblocks

- Geany

- Clion

- Windows only:

- Devc++

- Visual Studio

- …

[X]Compilation stages[X]Pre-processor This does not compile. It just executes the precompiler directives. For instance, it replaces the

iostreamheader and put it into the source codeg++ -E helloworld.cpp # This instruction counts how many lines my code has now g++ -E helloworld.cpp | wc -l

[X]Write file

./hello.x > archivo.txt

[X]Read keyboard

2. Introduccion a la programacion en C++

2.1. Program to compute the mean of a vector + git repo

#include <iostream> #include <vector> #include <cmath> #include <cstdlib> #include <algorithm> #include <numeric> // function declaration void fill(std::vector<double> & xdata); // llenar el vector double average(const std::vector<double> & xdata); // calcular el promedio int main(int argc, char **argv) { // read command line args int N = std::atoi(argv[1]); // declare the data struct std::vector<double> data; data.resize(N); // fill the vector fill(data); // compute the mean double result = average(data); // print the result std::cout.precision(15); std::cout.setf(std::ios::scientific); std::cout << result << "\n"; return 0; } // function implementation void fill(std::vector<double> & xdata) { std::iota(xdata.begin(), xdata.end(), 1.0); // 1.0 2.0 3.0 } double average(const std::vector<double> & xdata) { // forma 1 return std::accumulate(xdata.begin(), xdata.end(), 0.0)/xdata.size(); // forma 2 // double suma = 0.0; // for (auto x : xdata) { // suma += x; // } // return suma/data.size(); }

2.2. Plantilla base

1: // Plantilla de programas de c++ 2: 3: #include <iostream> 4: using namespace std; 5: 6: int main(void) 7: { 8: 9: 10: return 0; 11: }

2.3. Compilation

- We use g++, the gnu c++ compiler

- The most basic form to use it is :

g++ filename.cpp

If the compilation is successful, this produces a file called

a.out on the current directory. You can execute it as

./a.out

where ./ means “the current directory”. If you want to change the

name of the executable, you can compile as

g++ filename.cpp -o executablename.x

Replace executablename.x with the name you want for your

executable.

2.4. Hola Mundo

1: // Mi primer programa 2: 3: #include <iostream> 4: using namespace std; 5: 6: int main(void) 7: { 8: 9: cout << "Hola Mundo!" << endl; 10: 11: return 0; 12: } 13:

2.5. Entrada y salida

1: #include <iostream> 2: using namespace std; 3: 4: int main(void) 5: { 6: //cout << "Hola mundo de cout" << endl; // sal estandar 7: //clog << "Hola mundo de clog" << endl; // error estandar 8: 9: int edad; 10: cout << "Por favor escriba su edad y presione enter: "; 11: cout << endl; 12: cin >> edad; 13: cout << "Usted tiene " << edad << " anhos" << endl; 14: 15: // si su edad es mayor o igual a 18, imprimir 16: // usted puede votar 17: // si no, imprimir usted no puede votar 18: 19: return 0; 20: } 21:

1: // Mi primer programa 2: 3: #include <iostream> 4: using namespace std; 5: 6: int main(void) 7: { 8: char name[150]; 9: cout << "Hola Mundo!" << endl; 10: cin >> name; 11: cout << name; 12: 13: return 0; 14: } 15:

2.6. Loops

1: 2: // imprima los numeros del 1 al 10 suando while 3: 4: #include <iostream> 5: using namespace std; 6: 7: int main(void) 8: { 9: int n; 10: 11: n = 1; 12: while (n <= 10) { 13: cout << n << endl; 14: ++n; 15: } 16: 17: return 0; 18: }

#+RESULTS

2.7. Condicionales

1: // verificar si un numero es par 2: 3: /* 4: if (condicion) { 5: instrucciones 6: } 7: else { 8: instrucciones 9: } 10: */ 11: 12: #include <iostream> 13: using namespace std; 14: 15: int main(void) 16: { 17: int num; 18: 19: // solicitar el numero 20: cout << "Escriba un numero entero, por favor:" << endl; 21: // leer el numero 22: cin >> num; 23: 24: // verificar que el numero es par o no 25: // imprimir 26: // si el numero es par, imprimir "el numero es par" 27: // si no, imprimir "el numero es impar" 28: if (num%2 == 0) { 29: cout << "El numero es par" << endl; 30: } 31: if (num%2 != 0) { 32: cout << "El numero es impar" << endl; 33: } 34: 35: //else { 36: //cout << "El numero es impar" << endl; 37: //} 38: 39: return 0; 40: } 41:

3. Errors in numerical computation: Floating point numbers

3.1. Overflow for integers

We will add 1 until some error occurs

#include <cstdio> int main(void) { int a = 1; while(a > 0) { a *= 2 ; std::printf("%10d\n", a); } }

3.2. Underflow and overflow for floats

#include <iostream> #include <cstdlib> typedef float REAL; int main(int argc, char **argv) { std::cout.precision(16); std::cout.setf(std::ios::scientific); int N = std::atoi(argv[1]); REAL under = 1.0, over = 1.0; for (int ii = 0; ii < N; ++ii){ under /= 2.0; over *= 2.0; std::cout << ii << "\t" << under << "\t" << over << "\n"; } }

3.2.1. Results

| Type | Under | Over |

|---|---|---|

| float | 150 | 128 |

| double | 1074 | 1023 |

| long double | 16446 | 16384 |

3.3. Test for associativity

#include <cstdio> int main(void) { float x = -1.5e38, y = 1.5e38, z = 1; printf("%16.6f\t%16.6f\n", x + (y+z), (x+y) + z); printf("%16.16f\n", 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1 + 0.1); return 0; }

3.4. Machine eps

#include <iostream> #include <cstdlib> typedef long double REAL; int main(int argc, char **argv) { std::cout.precision(20); std::cout.setf(std::ios::scientific); int N = std::atoi(argv[1]); REAL eps = 1.0, one = 0.0; for (int ii = 0; ii < N; ++ii){ eps /= 2.0; one = 1.0 + eps; std::cout << ii << "\t" << one << "\t" << eps << "\n"; } }

3.4.1. Results

| Type | eps |

|---|---|

| float | 7.6e-6 |

| double | 1.1e-16 |

| long double | 1.0e-19 |

3.5. Exponential approximation

#include <iostream> #include <cmath> int factorial(int n); double fnaive(double x, int N); double fsmart(double x, int N); int main(void) { std::cout.precision(16); std::cout.setf(std::ios::scientific); double x = 1.234534534; for (int NMAX = 0; NMAX <= 100; ++NMAX) { std::cout << NMAX << "\t" << fnaive(x, NMAX) << "\t" << std::fabs(fnaive(x, NMAX) - std::exp(-x))/std::exp(-x) << "\t" << fsmart(x, NMAX) << "\t" << std::fabs(fsmart(x, NMAX) - std::exp(-x))/std::exp(-x) << std::endl; } return 0; } double fnaive(double x, int N) { double term = 0, suma = 0; for(int k = 0; k <= N; ++k){ term = std::pow(-x, k)/factorial(k); suma += term; } return suma; } int factorial(int n) { if (n <= 0) return 1; return n*factorial(n-1); } double fsmart(double x, int N) { double term = 1, suma = 1; for(int k = 0; k < N; ++k){ term *= (-x)/(k+1); suma += term; } return suma; }

3.6. Exercise: Substractive cancellation with the Quadratic equation

3.7. Exercise: Sum ups and down

Is there any computation difference between the following sums?

\begin{align} S_{up}(N) &= \sum_{n=1}^{N} \frac{1}{n},\\ S_{down}(N) &= \sum_{n=N}^{1} \frac{1}{n}. \end{align}Implement and plot the relative difference among them as a function of \(N\).

3.8. Total errros

(Check presentation for a practical example)

3.9. How to minimize numerical errors?

- Use numerical libraries: lapack, eigen, standard c++ lib (https://en.cppreference.com/w/, https://hackingcpp.com/cpp/cheat_sheets.html)

- Analize extreme cases, try to rewrite the expressions: http://herbie.uwplse.org/

- Minimize the use of substractions of similar numbers, operations between very large and/or very small numbers, normalize your models

- Some interesting refs:

4. Makefiles

4.1. What is a Makefile

Note: For more information, please see:

- Gnu make tutorial

- Software Carpentry Tutorial .

- https://kbroman.org/minimal_make/

- https://swcarpentry.github.io/make-novice/

- First linux version: https://elixir.bootlin.com/linux/0.01/source/kernel/Makefile (more info at https://lwn.net/SubscriberLink/928581/841b747332791ac4/)

A makefile allows you to atomatize the compilation process by following given rules, and for large projects this could actually speed up significantly the compilation process. You can also use a Makefile for latex projects and other related task where you want to reload and create automatically an updated version of a document when you have updated a small part. A rule in a makefile is written as

target : dependencies separated by space rule to build target #sumupdown.x : sumupdown.cpp # g++ sumupdown.cpp -o sumupdown.x

It is important to note that the beginning of the rule is indented with real tab, not with spaces.

4.2. Application example: Figure creation

Imagine that you want to automatize the creation of the figure of

the sumup and sumdown exercise from the numerical errors section. In this case

you should think about the dependencies: the latest figure, sumupdown.pdf will

depend on the a given gnuplot (or python) script (plot.gp) that read some data

from a given file (data.txt), so any change on those files should trigger and

update on the figure. Furhtermore, the data file will actually depend on the

source (sumupdown.cpp) and its related executable (sumupdown.x), since any

change there may imply new data. And actually the executable depends directly on

the source code. We could express this dependencies as

fig.pdf: script.gp data.txt gnuplot script.gp data.txt: sumupdown.x ./sumupdown.x > data.txt sumupdown.x: sumupdown.cpp g++ sumupdown.cpp -o sumupdown.x

sumupdown.pdf: datos.txt script.gp

gnuplot script.gp

The actual order is not important. Every time you run make it will check if any target is outdated and run the command needed. The follwing code shows a simple solution for the sum up and down

#include <iostream> #include <cmath> float sumup(int nterms); float sumdown(int nterms); int main(int argc, char **argv) { std::cout.precision(6); std::cout.setf(std::ios::scientific); for (int ii = 1; ii <= 10000; ++ii) { double sum1 = sumup(ii); double sum2 = sumdown(ii); std::cout << ii << "\t" << sum1 << "\t" << sum2 << "\t" << std::fabs(sum1-sum2)/sum2 << "\n"; } return 0; } float sumup(int nterms) { float result = 0; for(int n = 1; n <= nterms; n++) { result += 1.0/n; } return result; } float sumdown(int nterms) { float result = 0; for(int n = nterms; n >= 1; n--) { result += 1.0/n; } return result; }

And this is a simple gnuplot script example

set term pdf set out "sumupdown.pdf" # set xlabel "Nterms" # set ylabel "Percentual difference" plot 'data.txt' u 1:4 w lp pt 4

Now just run make and see what happens. Then uncomment, for example, the

commented lines inside the gnuplot script and run make again. Check that make

just reruns the needed rule, it does not recompile the whole program.

Actually, the makefile can be simplified and generalized using variables

fig.pdf: script.gp data.txt gnuplot $^ data.txt: sumupdown.x ./$^ > $@ %.x: %.cpp g++ $^ -o $@

4.3. Some modern tools

just: https://github.com/casey/just, https://cheatography.com/linux-china/cheat-sheets/justfile/cmake: https://cmake.org/, https://crascit.com/professional-cmake/xmake: https://xmake.io/#/ninja, bazel, ...

4.4. Application example: many files to compile

For this example let’s assume that you have two functions, foo and bar, that

you want to use repeatedly on several projects so you have moved them to their

corresponding header and implementations files as

// file foo.h #include <iostream> void foo(void);

// file foo.cpp #include "foo.h" void foo(void){ std::cout << "Inside foo\n"; }

// file bar.h #include <iostream> void bar(void);

// file bar.cpp #include "bar.h" void bar(void){ std::cout << "Inside bar\n"; }

and the main file,

#include "foo.h" #include "bar.h" int main(void) { foo(); bar(); return 0; }

If you want to compile these files, you need to run something like

# this creates the object foo.o g++ -c foo.cpp # this creates the object bar.o g++ -c bar.cpp # this links with main and creates executable main.x g++ main.cpp foo.o bar.o -o main.x

Of course, all this process can be automatized in a single shell

script but, if you change only foo.cpp, the shell script will run

again all the commands, so many non needed compilations are

performed. A makefile optimizes this process. Let’s write our first

makefile for the problem at hand:

main.x : foo.o bar.o

g++ -o main.x foo.o bar.o main.cpp

Here you see a special structure : a target name (main.x), a

pre-requisites list (~foo.o and bar.o), and the rule to make the

target. The rule MUST be indented with real tab. Save the file as

makefile-v1 and run the command

make -f makefile-v1

and you will see that make will create the objects and the executable. This is great! Even more, the objects are created automatically. These is done through something called automatic rules.

Now change a little bit the file foo.cpp, add some new printing,

and re-run make as before. What do you see? Now make is

recompiling only the object which has changed, not all of them, so

the re-compilation is much faster!.

After seeing this advantages, let’s try to generalize and use more useful syntax for make. Fir instance, you can specify variables, which can be overridden on the command line. Something like

CXX=g++ CXXFLAGS=-I. main.x: foo.o bar.o $(CXX) $(CXXFLAGS) -o main.x main.cpp foo.o bar.o

Compile again and test. Everything should work.

Now, we will specify a generic rule to create the .o objects, and

also the name of the objects in a variable. Furthermore, we use $@

to specify the name of the target, and $^ which symbolizes all the

items in the dependency list

CXX = g++ CXXFLAGS = -I. OBJ = foo.o bar.o main.x: $(OBJ) $(CXX) $(CXXFLAGS) -o $@ $^ main.cpp

Save and run it, you will still get the same but the makefile is becoming more generic, and therefore more useful.

Now let’s specify the generic rule to create the .o files, and

also specify that if we change the .h files then a recompilation

is needed (for now our makefile only detect changes in .cpp

files):

CXX = g++ CXXFLAGS = -I. OBJ = foo.o bar.o DEPS = foo.h bar.h %.o: %.cpp $(DEPS) $(CXX) -c -o $@ $< $(CXXFLAGS) main.x: $(OBJ) $(CXX) $(CXXFLAGS) -o $@ $^ main.cpp

#+RESULTS

Where we have specify the dependency on DEPS, and also we are

using a generic rule to tell how to create any .o file

(exemplified with the generic %.o symbol), from any corresponding

%.c file, and $< means the first item on the deps list. Again,

save it and run it.

You can rules to clean the directory (to erase object files, for example), to add libs, to put the headers on another directory, etc. For example, the following adds a phony rule (which does not create any target) to clean the directory

CXX = g++ CXXFLAGS = -I. OBJ = foo.o bar.o DEPS = foo.h bar.h %.o: %.cpp $(DEPS) $(CXX) -c -o $@ $< $(CXXFLAGS) main.x: $(OBJ) $(CXX) $(CXXFLAGS) -o $@ $^ main.cpp .PHONY: clean clean: rm -f *.o *~ *.x

In order to run this rule, you need to write

make -f makefile-v5 clean

And, finally, a more complete example with comments and new syntax, which you can adapt for your own needs

CXX = g++ CXXFLAGS = -I. LDFLAGS = SOURCES = main.cpp foo.cpp bar.cpp OBJ = $(SOURCES:.cpp=.o) # extracts automatically the objects names DEPS = foo.h bar.h all : main.x $(SOURCES) $(DEPS) # this is the default target main.x: $(OBJ) @echo "Creating main executable ..." $(CXX) $(CXXFLAGS) -o $@ $^ $(LDFLAGS) .cpp.o: $(CXX) -c -o $@ $< $(CXXFLAGS) .PHONY: clean clean: rm -f *.o *~ *.x

4.5. Exercise

- Take the codes for overflow and underflow and automatize the detection of

overflow with the standard function

isinf. - When they are detecting overflow automatically, stopping and printing where

the overflow occurs, put them in separated files (

.hand.cpp). - Then create a main file which calls those functions.

- Now write a makefile to automatize the compilation. Use the latest version of the example Makefile.

- When everything is working send it to assigned repository.

- Do the same now including the code which computes the machine eps, using its

own

.hand.cpp. - If you use latex, how will you write a Makefile for that? Well, at the end

you can use

latexmk, of course. Try to think on other uses for make (like automatic zipping and mailing of some important file, )

5. Standard library of functions - Random numbers

5.1. Containers

5.1.1. Vector

5.1.2. Map

5.1.3. Set

5.2. Algorithms

5.3. Special functions

5.3.1. Gamma function

See http://en.cppreference.com/w/cpp/numeric/math/tgamma

#include <cstdio> #include <cmath> int main(void) { const double XMIN = 0.0; const double XMAX = 11.0; const double DX = 0.1; const int NSTEPS = int((XMAX-XMIN)/DX); for(int ii = 0; ii < NSTEPS; ++ii) { double x = XMIN + ii*DX; printf("%25.16e%25.16e\n", x, std::tgamma(x)); } return 0; }

5.3.2. Beta function

// requires c++17 #include <cstdio> #include <cmath> int main(void) { const double XMIN = -3.0; const double XMAX = 3.0; const double YMIN = -3.0; const double YMAX = 3.0; const double DELTA = 0.01; const int NSTEPS = int((XMAX-XMIN)/DELTA); for(int ii = 0; ii < NSTEPS; ++ii) { double x = XMIN + ii*DELTA; for(int jj = 0; jj < NSTEPS; ++jj) { double y = YMIN + jj*DELTA; printf("%25.16e%25.16e%25.16e\n", x, y, std::beta(x, y)); } printf("\n"); } return 0; }

5.4. Random numbers

5.4.1. Uniform distribution with random seed

#include <random> #include <iostream> int main(void) { //std::random_device rd; // inicializacion con semilla aleatoria //std::mt19937 gen(rd()); // genera bits aleatorios std::mt19937 gen(10); std::uniform_real_distribution<> dis(1, 2); // distribucion for(int n = 0; n < 10; ++n) { std::cout << dis(gen) << std::endl; } }

5.4.2. Uniform distribution controlled seed

#include <random> #include <iostream> #include <cstdlib> int main(int argc, char **argv) { int seed = std::atoi(argv[1]); std::mt19937 gen(seed); std::uniform_real_distribution<double> dis(1, 2); for(int n = 0; n < 100000; ++n) { std::cout << dis(gen) << std::endl; } return 0; }

5.4.3. Normal distribution controlled seed

#include <random> #include <iostream> int main(void) { int seed = 1; std::mt19937 gen(seed); std::normal_distribution<> dis{1.5, 0.3}; for(int n = 0; n < 100000; ++n) { std::cout << dis(gen) << std::endl; } }

5.4.4. Homework

Create an histogram to compute the pdf and use it to test the random numbers produced by several distributions.

6. Workshop: How to install programs from source

In this workshop we will learn how to install a program or library from source to the home directory of the user. This is useful when you need a programa but it is not available in the system you are working on. Also, It could be that you actually need a newer version than the installed one. We will use the following programs, some of them already available at the computer room:

| Name | installed version | latest version |

|---|---|---|

| fftw | 3.3.4 | 3.3.8 |

| Eigen C++ | 3.2.7 | 3.3.7 |

| voro++ | Not installed | 0.4.6 |

| g++ 9.x | Not installed | 9.2 |

We will learn how to do it by compiling a package directly, something very useful to know, and also using a new tool aimed for supercomputers, called spack , that simplifies the installation of software and also allows to have many versions of the same library, something not easy done manually.

6.1. Preliminary concepts

It is important for you to know a little how your operating system

and the shell look for commands. In general, the PATH variable

stores the directories where the shell interpreter will look for a

given command. To check its contents, you can run the following,

echo $PATH

If, for instance, you want to add another directory to the PATH,

like the directory $HOME/local/bin, you can run the following

export PATH=$PATH:$HOME/bin

This appends the special directory to the old content of the

PATH.

When you want to compile a given program that uses some other

libraries, you must specify any extra folder to look for include

files, done with the -I flag, and for libraries, done with the

-L and -l flags. For instance, let’s assume that you installed some

programs in your HOME/local, the include files inside $HOME/local/include,

and the libraries inside $HOME/local/lib. If you want to tell the

compiler to use those, you must compile as

g++ -I $HOME/local/include -L $HOME/local/lib filename.cpp -llibname

Finally, whenever you are installing a program from source you must be aware that this reduces to basically the following steps:

- Download and untar the file. Enter the unpacked directory.

- Read the README/INSTALL files for any important info.

If the program uses cmake, create a build dir and then use cmake to generate the Makefiles:

mkdir build cd build cmake ../ -DCMAKE_INSTALL_PREFIX=$HOME/local

On the other hand, if the program uses

configure, then configure the system to install on the required path./configure --prefix=$HOME/localCompile and install the program, maybe using many threads

make -j 4 # uses for threads to compile make install

Done. The program is installed.

Finally, when compiling, do not forget to use the flags

-Land-Iappropriately.

Setting all the flags and making sure to use the right version is

sometimes difficult, so tools like spack aim to manage and

simplify this.

6.2. Checking the version for already installed programs

If you are used to apt-get or something related, you can use the

package manager to check. But, in general, you can check the

versions by looking at the appropriate places on your

system. Typically, if you are looking for a library, they are

installed under /usr/lib or /usr/local/lib, while include files

are installed under /usr/include or /usr/local/include

. For instance, if you are looking for library foo, then you should

look for the file libfoo.a or libfoo.so . One useful utility

for this is the command locate or find .

locate libfoo find /usr/lib -iname "*libfoo*"

Execute these commands to check the actual versions for fftw, eigen, and git. What versions do you have? If you a re looking for a program, or an specific version of program, you must check if the program exists by executing it. For command line programs you usually can check the version by using the following

programname --version

where programname is the name of the command.

6.3. Preparing the local places to install the utilities

In this case we will install everything under the $HOME/local

subdirectory inside your home, so please create it. Remember that

the symbol $HOME means your home directory. The utilies will then

create the appropriate folders there. NOTE: Better use the $HOME

var instead of ~, which is another way to state the name of your

home.

6.4. Typical installation algorithm

- Download the source from the project page. This normally implies

downloading a tarball (file ending with

.tar.gzor.tar.bz2) . Un-compress the downloaded file. For a tarball, the command will be

tar xf filename.tar.gz

- Enter to the newly uncompressed folder (almost always usually

cd filename). - READ the

READMEand/or theINSTALLfile to check for important info regarding the program. SOmetimes these files tell you that the installation is special and that you are required to follow some special steps (that will happen withvoro++) CONFIGURATION: You have two options, each one independent on the other:

- If the program has a configure script, then just run

./configure --help

to check all the available options. Since we want to install on the

$HOME/localdirectory, then we need to run./configure --prefix=$HOME/localIf you don’t specify the prefix, then the program will be installed on the

/usr/binor/usr/local/bindirectories, whatever is the default. If these commands ends successfully, it will print some info to the screen and will tell you to go to the next step. Otherwise you will need to read the log and fix the errors (like installing a dependency).- If the program uses

cmake, a makefile generator and

configurator, then you need to do the following:

mkdir build # creates a build directory to put there the temporary built files cd build cmake ../ -DCMAKE_INSTALL_PREFIX:PATH=$HOME/local # configure the building process for the source code located on the parent directory

COMPILATION: Now that you have configured your installation, you need to compile by using the GNU make utility (Note: All this build utilities come from the gnu organization and are free software as in freedom). If you have several cores, you can use them in parallel, assuming the that the Makefile and your make versions supports it:

make -j 3 # for three cores, but, if you are unsure, just use one core.

Any errors in this stage should be fixed before going to the next one.

INSTALLATION After successful compilation, you can install by using

make installThis will install the program (libraries, binaries, include files, manual files, etc) onto the

prefixdirectory. If you want to instll system-wide (you did not set theprefix), then you need to usesudo make install. In this case you don’t needsudosince you are installing on your own home.TESTING In this case use a program to test your installation. When you compile your program and you want to use the version that you installed, you need to tell the compiler where to find the libraries/includes, so you need something like

g++ -L $HOME/local/lib -I $HOME/local/include programname.cpp -llibname

-L $HOME/local/libtells the compiler to look for libraries on

the

$HOME/local/libdirectory.-I $HOME/local/includetells the compiler to look for include

files on the

$HOME/local/includedirectory.-llibnametells the compiler to link with the given

library. Sometimes is not needed. Sometimes is crucial. Be careful, if your library is called libfftw, you need to write

-lfftw, not-llibfftw.

6.5. Workshop

For each of the proposed utilities written at the beginning, follow the next procedure:

- Check the installed version number and compare with the latest one.

- Install the latest version on your home directory by following the procedure stated above.

- Run each of the following example program but make sure you a re using you installed version. Show to the instructor the compilation line.

Important NOTE: for g++, use the prefix -9 in the configure

line to put that as suffix to the commands and avoid collisions with the

compiler already installed in the system. This can be done by

adding the flag --program-suffix=-9 to the configure command.

6.6. Test Programs

6.6.1. fftw

This is a c code. Save it as testfftw.c and compile with gcc

instead of g++ .

// From : https://github.com/undees/fftw-example // This ia a c code (save it as testfftw.c) /* Start reading here */ #include <fftw3.h> #define NUM_POINTS 128 /* Never mind this bit */ #include <stdio.h> #include <math.h> #define REAL 0 #define IMAG 1 void acquire_from_somewhere(fftw_complex* signal) { /* Generate two sine waves of different frequencies and * amplitudes. */ int i; for (i = 0; i < NUM_POINTS; ++i) { double theta = (double)i / (double)NUM_POINTS * M_PI; signal[i][REAL] = 1.0 * cos(10.0 * theta) + 0.5 * cos(25.0 * theta); signal[i][IMAG] = 1.0 * sin(10.0 * theta) + 0.5 * sin(25.0 * theta); } } void do_something_with(fftw_complex* result) { int i; for (i = 0; i < NUM_POINTS; ++i) { double mag = sqrt(result[i][REAL] * result[i][REAL] + result[i][IMAG] * result[i][IMAG]); printf("%g\n", mag); } } /* Resume reading here */ int main() { fftw_complex signal[NUM_POINTS]; fftw_complex result[NUM_POINTS]; fftw_plan plan = fftw_plan_dft_1d(NUM_POINTS, signal, result, FFTW_FORWARD, FFTW_ESTIMATE); acquire_from_somewhere(signal); fftw_execute(plan); do_something_with(result); fftw_destroy_plan(plan); return 0; }

6.6.2. Eigen C++

These are C++ codes. Save them, compile, run and explain what

they do.

#include <iostream> #include <Eigen/Dense> #include <Eigen/Core> using Eigen::MatrixXd; int main() { //std::cout << EIGEN_MAYOR_VERSION << std::endl; std::cout << EIGEN_MINOR_VERSION << std::endl; MatrixXd m(2,2); m(0,0) = 3; m(1,0) = 2.5; m(0,1) = -1; m(1,1) = m(1,0) + m(0,1); std::cout << m << std::endl; }

#include <iostream> #include <Eigen/Dense> using namespace Eigen; int main() { Matrix2d a; a << 1, 2, 3, 4; MatrixXd b(2,2); b << 2, 3, 1, 4; std::cout << "a + b =\n" << a + b << std::endl; std::cout << "a - b =\n" << a - b << std::endl; std::cout << "Doing a += b;" << std::endl; a += b; std::cout << "Now a =\n" << a << std::endl; Vector3d v(1,2,3); Vector3d w(1,0,0); std::cout << "-v + w - v =\n" << -v + w - v << std::endl; }

#include <iostream> #include <Eigen/Dense> using namespace std; using namespace Eigen; int main() { Matrix3f A; Vector3f b; A << 1,2,3, 4,5,6, 7,8,10; b << 3, 3, 4; cout << "Here is the matrix A:\n" << A << endl; cout << "Here is the vector b:\n" << b << endl; Vector3f x = A.colPivHouseholderQr().solve(b); cout << "The solution is:\n" << x << endl; }

#include <iostream> #include <Eigen/Dense> using namespace std; using namespace Eigen; int main() { Matrix2f A; A << 1, 2, 2, 3; cout << "Here is the matrix A:\n" << A << endl; SelfAdjointEigenSolver<Matrix2f> eigensolver(A); if (eigensolver.info() != Success) abort(); cout << "The eigenvalues of A are:\n" << eigensolver.eigenvalues() << endl; cout << "Here's a matrix whose columns are eigenvectors of A \n" << "corresponding to these eigenvalues:\n" << eigensolver.eigenvectors() << endl; }

6.6.3. Voro++

Use the example http://math.lbl.gov/voro++/examples/randompoints/

// Voronoi calculation example code // // Author : Chris H. Rycroft (LBL / UC Berkeley) // Email : chr@alum.mit.edu // Date : August 30th 2011 #include "voro++.hh" using namespace voro; // Set up constants for the container geometry const double x_min=-1,x_max=1; const double y_min=-1,y_max=1; const double z_min=-1,z_max=1; const double cvol=(x_max-x_min)*(y_max-y_min)*(x_max-x_min); // Set up the number of blocks that the container is divided into const int n_x=6,n_y=6,n_z=6; // Set the number of particles that are going to be randomly introduced const int particles=20; // This function returns a random double between 0 and 1 double rnd() {return double(rand())/RAND_MAX;} int main() { int i; double x,y,z; // Create a container with the geometry given above, and make it // non-periodic in each of the three coordinates. Allocate space for // eight particles within each computational block container con(x_min,x_max,y_min,y_max,z_min,z_max,n_x,n_y,n_z, false,false,false,8); // Randomly add particles into the container for(i=0;i<particles;i++) { x=x_min+rnd()*(x_max-x_min); y=y_min+rnd()*(y_max-y_min); z=z_min+rnd()*(z_max-z_min); con.put(i,x,y,z); } // Sum up the volumes, and check that this matches the container volume double vvol=con.sum_cell_volumes(); printf("Container volume : %g\n" "Voronoi volume : %g\n" "Difference : %g\n",cvol,vvol,vvol-cvol); // Output the particle positions in gnuplot format con.draw_particles("random_points_p.gnu"); // Output the Voronoi cells in gnuplot format con.draw_cells_gnuplot("random_points_v.gnu"); }

On gnuplot do the following:

splot "random_points_p.gnu" u 2:3:4, "random_points_v.gnu" with lines

6.6.4. g++ 9.2

Just run the command and check the version,

$HOME/local/bin/g++-9 --version

Now run any of the special functions examples that required

-std=c++17 .

6.7. Spack: the modern solution

Spack was designed by llnl and is targeted to simplify the installation and managment of HPC programs with many possible versions and dependencies. Check the docs at https://spack.readthedocs.io/en/latest/tutorial.html or https://bluewaters.ncsa.illinois.edu/webinars/software-ecosystems/spack . For now let’s just install it, configure it, and install some simple tools.

6.7.1. Installation

Following the official docs, just clone the repository

git clone https://github.com/spack/spack.git # clones the repo cd spack #git checkout releases/v0.17 # Checkout the latest stable release source share/spack/setup-env.sh # Setup the environment , this command should go in ~/.bashrc

Now you can check what can be installed

spack list

To be able to use spack easily in the future, it is recommended to add the

source command to your ~/.bashrc, so just add the following at the end of the file

source $HOME/PATHTOREPO/share/spack/setup-env.sh

and then close and open the terminal.

In our computer room there has been a problem with spack and openssl. It is

better to instruct spack to use the local openssl version instead of building

one. To do so, add the following to your spack package config file, $SPACK_REPO/etc/spack/packages.yaml:

packages: openssl: externals: - spec: openssl@1.1.1m prefix: /usr buildable: False

You can check the correct version with \(openssl version\). Furthermore, to be

able to run your installed programs on several computers with different

processors, use the flag target=x86_64 .

6.7.2. Installing some tools with spack

Now let’s just install the gsl scientific library and some

eigen alternative versions:

spack info gsl # Get some information about versions, deps, etc spack install gsl@2.5 spack install gsl@2.4 module avail

To check the installed software, you can use the module command

as (installed when you used bootstrap)

module avail

Now you will see that you have two versions of the gsl. If you

want to use one of them, you will load it with spack. The check

the change in environment, first check the PATH, then load, then

compare

echo $PATH echo $C_INCLUDE_PATH echo $LD_LIBRARY_PATH

Now load the gsl version 2.5,

spack load gsl@2.5

and check the new paths

echo $PATH echo $C_INCLUDE_PATH echo $LD_LIBRARY_PATH

If you unload the gsl 2.5, everything goes back to normal,

spack unload gsl@2.5 echo $PATH echo $C_INCLUDE_PATH echo $LD_LIBRARY_PATH

To learn more about spack, check the official docs and tutorials. In the following we will use it to play with several packages in parallel programming. Is voro++ available? what about eigen?

7. Debugging

7.1. Example

Fix the following code

#include <iostream> #include <cstdlib> int main(int argc, char **argv) { // declare variables const int N = 10; double * array; //reserve memory array = new double [N]; // initialise array for (int ii = 0; ii < N; ++ii) { array[ii] = 2*(++ii); // != 2*(ii++) ? } // print some values int idx = 2; std::cout << array[idx] << std::endl; idx = 10; std::cout << array[idx] << std::endl; // compiles, but ... // free memory //delete [] array; return EXIT_SUCCESS; }

7.2. Sanitizers

Besides the typical printing to help debugging, a first helping hand would the compiler sanitizers. Try recompiling the previous code using the sanitizer flags as

g++ -fsanitize=address -fsanitize=leak -fsanitize=undefined source.cpp

and then run it

./a.out

Fo you observe something new? sanitizers check your code in runtime and help you find errors faster.

7.3. GDB (Gnu debugger)

7.3.1. How to use gdb?

Let’s use the following c++ code, called gdb_example_01.cpp:

#include <iostream> #include <cstdlib> int main(int argc, char **argv) { // declare variables const int N = 10; double * array; //reserve memory array = new double [N]; // initialise array for (int ii = 0; ii < N; ++ii) { array[ii] = 2*(++ii); // != 2*(ii++) ? } // print some values int idx = 2; std::cout << array[idx] << std::endl; idx = 10; std::cout << array[idx] << std::endl; // compiles, but ... // free memory delete [] array; return EXIT_SUCCESS; }

To compile for debugging, you should use

$ g++ -g -ggdb gdb_example_01.cpp -o gdb_example_01.x

The flag -g tells the compiler to generate additional debugging symbols to be

used by the debugger. In addition, you can also specify -ggdb to be more

specific about the debugger symbols you want (specific for gdb). Even more, you

can also specify the debug level by using -g3 (or -ggdb3). Default is 2.

Level 3 allows for debugging fo preprocessor macros.

Now, if you run your code

$ ./gdb_example_01.x

you will get the original output. The code does not have any error, and in principle it does not need debugging. But, you can still use the debugger to explore what your “correct” code is doing.

To attach gdb to your executable, run gdb as

$ gdb ./gdb_example_01.x

NOTE: gdb can also attach to already running process, by using the pid. For more info, see

man gdband maybeman ps.

This will bring to something similar to (this is for a Mountain Lion Mac os X machine)

GNU gdb (GDB) 7.5.1 Copyright (C) 2012 Free Software Foundation, Inc. License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html> This is free software: you are free to change and redistribute it. There is NO WARRANTY, to the extent permitted by law. Type "show copying" and "show warranty" for details. This GDB was configured as "x86_64-apple-darwin12.2.0". For bug reporting instructions, please see: <http://www.gnu.org/software/gdb/bugs/>. (gdb)

NOTE From now on, we will be inside the gdb console, so in the next examples please write the commands inside the gdb console. For example,

(gdb) stepmeans writestepinside the gdb console, and not(gdb) stepinside the shell.

Now, you are ready to go. First of all, keep in mind that your program is not yet running, it was just attached to gdb and all the debugging symbols were loaded. To actually run your program, you must write:

(gdb) run

NOTE: If your command needs command line arguments, you can run it as

(gdb) run [arglist], where[arglist]is the optional argument list.

And now you will get … guess … 0 again. Your program just ran up

to the end without any error. Now let’s get to the interesting part.

7.3.2. Breakpoints

A breakpoint is a point in your source code where you want to stop the execution in order to check whatever condition you want (the value of a variable, the change in a variable, etc), or, for example, to continue the execution by steps. We will set a breakpoint at line 5 of our source code. This is as simple as writting

(gdb) break 5

and now run again the executable

(gdb) run

As you can see, the code now runs up to line 5, where gdb inform us we have reached the breakpoint. If you check the source code, we are in the line

int a;

that is, we are just declaring the variable a. What is its value at

this point? 0? 1? Let’s check

(gdb) print a

The value should be poor memory garbage, like 0xff2345 or similar. It depends on the machine. Now, if we move one step to the next line in the execution order

(gdb) next

we will be in the line a = 0. Let’s check again the value of variable

a

(gdb) print a

it should now print 0. As we can see, we can check the value of a local variable. In general, we will be able to check the value of both local and global variables.

Other examples for setting breakpoints:

- Setting the break point at a given line of a given file:

(gdb) break gdb_example_XX.cpp:10

- Setting a break point when calling a function:

(gdb) break function_name

- Checking all breakpoints

(gdb) info breakpoints

- Deleting a break point (the second one)

(gdb) delete 2

- Ignore a given breakpoint (

3) for a number of times (100) (useful for iterations)

(gdb) ignore 3 100

- Conditional break :

(gdb) break gdb_example_XX.cpp:5 if localvar == 10

7.3.3. Execution Control

The instruction next tells gdb to advance in the execution order,

which also executes completely functions and returns to the main

function, while step goes inside the function step-by-step. Let’s

check the difference with another code (gdbexample02.cpp):

#include <iostream> void do_something(int & var); int main(int argc, char **argv) { int a; a = 0; std::cout << a << std::endl; do_something(a); std::cout << a << std::endl; return EXIT_SUCCESS; } void do_something(int & var) { int localvar = -1; var = 10.0; }

Compile it with debugging symbols

$ g++ -g -ggdb gdb_example_02.cpp -o gdb_example_02.x

and attach to gdb

$ gdb ./gdb_example_02.x

Let’s make a breakpoint at line 9 and then run the code

(gdb) break gdb_example_02.cpp:9 (gdb) run

Now advance step by step and explore the variables at local level. Try

to check the difference between next and step exactly at the

function call.

(gdb) step (gdb) step (gdb) next (gdb) ... (gdb) print localvar (gdb) print &localvar

You can also change the output format when printing a variable:

(gdb) p /d a (gdb) p /t a (gdb) p /x a

Add a break point at the function do_something. Compare the addresses

of the variables a and var inside the function do_something. Are

they the same or different? why?

Other control structures:

- Watch for a change on a given variable:

(gdb) watch foo

- Read-Watch for a given variable:

(gdb) rwatch foo

- Read-Write-Watch for a given variable:

(gdb) awatch foo

- Continue until next breakpoint:

(gdb) continue

- Finish current function:

(gdb) finish

7.3.4. Other useful gdb commands

(gdb) bt: Backtrace: display the program stack:(gdb) edit [file:]function: look at the program line where it is presently stopped.(gdb) help [name]: Show information about GDB command name, or general information about using GDB(gdb) quit: Exit from GDB.(gdb) list [file:]function: type the text of the program in the vicinity of where it is presently stopped.(gdb) call localfunction(): Calls a function calledlocalfunction()linked in the code.

7.3.5. Catching a segfault

Sometimes, when you are lazy or sloppy with the memory you use, or when

you just have bad luck (some strange problems), you could face the

infamous Segementation fault error. Let’s see how the debugger can

help us to catch a segfault and to fix it!

First, please compile the following code (gdb_example_segfault.cpp):

#!c++

#include <iostream>

#include <cstdlib>

int main(int argc, char **argv)

{

// declare variables

const int N = 10;

double * array;

//reserve memory

array = new double [N];

// initialise array

for (int ii = 0; ii < N; ++ii) {

array[ii] = 2*(++ii); // != 2*(ii++) ?

}

// print some values

int idx = 2;

std::cout << array[idx] << std::endl;

idx = 10;

std::cout << array[idx] << std::endl; // compiles, but ...

// free memory

delete [] array;

return EXIT_SUCCESS;

}

Now compile and run gdb on it:

$ g++ -g gdb_example_segfault.cpp -o gdb_example_segfault.x $ gdb ./gdb_example_segfault.x (gdb) run

What happens? it runs, but then you are facing the terrible segfault error. What is the cause? let’s use gdb:

(gdb) where

Now it tell us that the problem is on line XXXXX. If we check our source code, that is the line where we are accessing element with index 10 on an array whose maximum index is 9 (since its size is 10), and therefore we are out the fence. Now comment or remove that line, recompile, and rerun on gdb. Is there any error?

You can also check the value of the iteration variables or related to

track better the error. But where is many times enough to point you to

the problem.

You can also test the effect of other variable value, by using set:

(gdb) break 15 (gdb) run ... (gdb) set var idx = 4 (gdb) step

NOTE: If you do not get aby error but just strange results, try re-compiling your software with the flag -fsanitize=address -fsanitize=leak (only works recent for gnu compilers) and then you will catch the errors at running time.

7.3.6. Interfaces (graphical)

- Tui mode

gdb -tui mode: Ncurses gui

- Inside emacs

When having a file openned in emacs, press

M-xand write gdb, press enter. You will be presented with an example command to run gdb, modify it as you wish. Then, emacs will be yor debugger, and you can move from frame to frame by using the cursor orC-x o. - gdbgui

- gdbfrontend

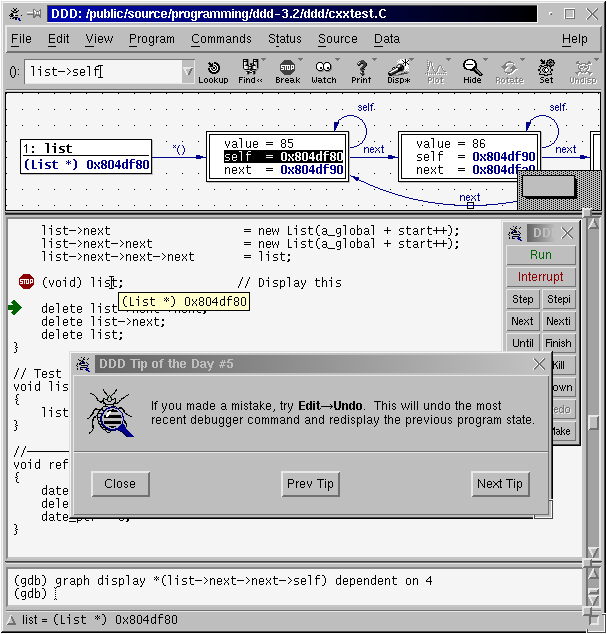

- DDD : Data Display Debugger

Although dated, its great strenght is the ability to plot data with the command

grap plot dataarrayname, and check its evilution as the you debug. - Others

Codeblocks, geany, clion, etc all them have interfaces for gdb.

- Online gdb

7.3.7. Another exercise

Please try to help the poor code called please_fixme.cpp. Share your

experiences. How many bugs did you find?

#Poor man’s profiler#

- Get the process id:

$ top

or

$ ps aux

- Attach the process to gdb (

PIDis the process id):

$ gdb -p PID

Now the program is paused.

- check the stack:

(gdb) thread apply all bt

Check what appears first.

- Continue the execution:

(gdb) continue

- Repeat previous two steps as many times as you want and make a histogram of the most executed functions.

7.3.8. Debugging within emacs

Please check emacs gdb mode

- Open your source code with emacs.

- Compile with

M-x compile.Mis the meta function. By default it uses make, but you can modify the compile command. - Activate de debugging:

M-x gdb - Debug. To move from one frame to another you can use either the mouse

or the shortcut

ctrl-x o. To close a pane, usectrl-x 0when inside that pane.

7.3.9. Modern tools

- Python

Gdb version >= 7.x supports python. You can use to explore more info about your program, pretty print data, and even plot (although blocking).

To load a c++ var into a python do, inside gdb,

py my_array = gdb.parse_and_eval("my_array") py print my_array.type

Even more, to plot some data structure with matplotlib, you can as follows (Based on https://blog.semicolonsoftware.de/debugging-numerical-c-c-fortran-code-with-gdb-and-numpy/)

1: int main(int argc, char **argv) { 2: const int nrow = 4; 3: const int ncol = 3; 4: double x[nrow * ncol]; 5: for(int i = 0; i < nrow; i++) { 6: for(int j = 0; j < ncol; j++) { 7: x[i * ncol + j] = i * j; 8: } 9: } 10: // BREAK here 11: }

Follow the previous url to install gdbnumpy.py.

Compile as

gcc ex-01.c -g -ggdb

and now, run

gdband put the following commandsbr 10 # sets a breakpoint run # to load the program symbols py import gdb_numpy py x = gdb_numpy.to_array("(double *)x", shape=(4 * 3,)).reshape(4, 3) py print x py import matplotlib.pyplot as plt py plt.imshow(x) py plt.show()

Use gdb to execute, step by step, the following code

#include <iostream> #include <cstdlib> int main(int argc, char **argv) { int a; a = 0; std::cout << a << std::endl; a = 5; return EXIT_SUCCESS; }

7.3.10. Examples to debug

Example 1

#include <iostream> int main(void) { //double x[10], y[5]; // imprime raro double y[5], x[10]; // imprime 1 for(int ii = 0; ii < 5; ++ii) { y[ii] = ii +1; } for(int ii = 0; ii < 10; ++ii) { x[ii] = -(ii +1); } double z = x[10]; std::cout << z << std::endl; return 0; }

7.4. DDD : Data Display Debugger

The Data Display Debugger - ddd is a graphical frontend for command line debuggers like gdb, dbx, jdb, bashdb, etc, and some modified python debugger. It easies the debug processing by adding some capabilities, like visual monitoring of data and data plotting (killer feature).

NOTE: DDD is hard to configure, it is so rebel. If after configuring some options you are having issues, like dd hanging with a message “Waiting for gdb to start”, or the plotting window is always waiting, then close ddd, delete the config file with

rm -rf ~/.ddd, and start over by configuring ONLY one option at a time (configure one option, then close it, then open, then configure other option, then close, etc).

7.4.1. Exercise

Repeat the previous debugging exercises by using ddd. In particular, use

the display and watch. Do you find them convenient? and what about

graph display data?

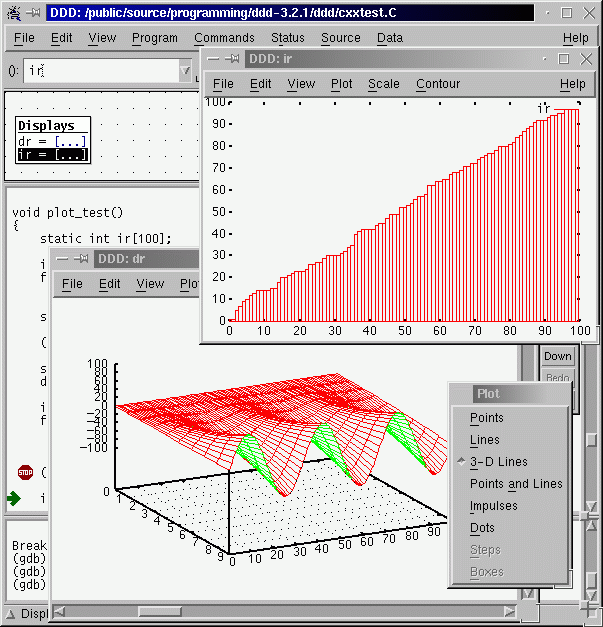

7.4.2. DDD data plotting with gnuplot

One of the killer features of ddd is its capability to actually plot the data with gnuplot. Let’s do an example:

- Compile (with debugging activated, of course) the code

array.cpp. - Now open the executable with ddd.

- Establish a break point at main.

- Run the executable under ddd.

- Now write

graph plot data, or equivalently, you can selectdatawith the mouse and then click to plot on the menu bar. NOTE : If you are having issues please try to configure gnuplot helper to be on an external window, then close, then re-open, and try again. Check also Notes above. - Now proceed by steps. You will be able to see a plot of the array

dataas it changes its values. Awesome, isn’t it? - Do the same for the 2d array. What will you expect now to be plotted? a line or a surface?

7.5. TODO Examples to solve

Let’s use ddd to explore some other exercises.

- ICTP examples

Browse to ICTP-examples, then go to debugging, then ICTP-gdbexamples , and download each file, or just download all files compressed at example files

- Other

These are more examples: original link

7.6. Valgrind

Figure 1: Valgrind Logo

From Web Page: > Valgrind is an instrumentation framework for building dynamic analysis tools. There are Valgrind tools that can automatically detect many memory management and threading bugs, and profile your programs in detail. You can also use Valgrind to build new tools.

The Valgrind distribution currently includes six production-quality tools: a memory error detector, two thread error detectors, a cache and branch-prediction profiler, a call-graph generating cache and branch-prediction profiler, and a heap profiler. It also includes three experimental tools: a heap/stack/global array overrun detector, a second heap profiler that examines how heap blocks are used, and a SimPoint basic block vector generator. It runs on the following platforms: X86/Linux, AMD64/Linux, ARM/Linux, PPC32/Linux, PPC64/Linux, S390X/Linux, MIPS/Linux, ARM/Android (2.3.x and later), X86/Android (4.0 and later), X86/Darwin and AMD64/Darwin (Mac OS X 10.6 and 10.7, with limited support for 10.8).

7.6.1. Graphical Interfaces

7.6.2. As memory checker

In this section we will focus on valgrind as memory checker tool (debugging part). Therefore, we will invoke valgrind with that option:

$ valgrind --tool=memcheck --leak-check=yes myprog arg1 arg2

where myprog is your executable’s name, and arg1 etc are the

arguments.

We will use the files inside valgrind/Valgrind-QuickStart

Please compile the file a.c (which is also on the compressed file -

check wiki main page) and run valgrind on it. What do you obtain? Do you

have memory leaks? Please fix it until you have no memory errors.

Do the same for b.c .

- Example : Memory leak

#include <iostream> #include <cstdlib> void getmem(double *ptr, int n); int main(int argc, char **argv) { // declare variables const int N = 10000; double * array; for (int ii = 0 ; ii < 20; ++ii) { getmem(array, N); } // free memory // delete [] array; return EXIT_SUCCESS; } void getmem(double *ptr, int n) { ptr = new double [n]; data[0] = data[1]*2; }

- More examples : ICTP

https://github.com/thehackerwithin/PyTrieste/tree/master/valgrind

Please enter the

SoftwareCarpentry-ICTPvalgrind subfolder and locate the source files. CompilesimpleTest.ccas$ g++ -g simpleTest.cc -o simpleTest

Now run valgrind on it

$ valgrind --track-origins=yes --leak-check=full ./simpleTest 300 300

Check how the memory leaks depend on the parameters. Fix the code.

7.7. TODO Homework

Modularize one of the previous codes into a header a implementation file and a main file. This will help when writing tests.

8. Unit Testing : Ensuring fix and correct behavior last

Unity testing allows to ensure that a given software behaves in the correct way, at least for the cases one is testing. Once a function is written (or even before in TTD) or a bug is fixed, it is necessary to write a test that ensures the function to work properly in limit cases or the bug to not reappear in the future. There are several levels associated with unit testing .

In this unit we will learn the general philosophy behind it and a couple of tools to implement very basic tests, althoguh the list of testing frameworks is very large. Furthermore, modularization will be very important, so you must have a clear understanding on how to split some given code into headers, source files, and how to compile objects and then link them using the linker, hopefully through a Makefile.

8.1. Catch2

Our goal here is to learn to use catch2 to test a very simple function extracted

from their tutorial. Later we will modularize the code to practice that and

write a useful Makefile.

8.1.1. Install catch2

We will install catch2 using spack:

spack install catch2

8.1.2. Tutorial example

This is the file example extracted from catch2 tutorial.

#define CATCH_CONFIG_MAIN // This tells Catch to provide a main() - only do this in one cpp file #include "catch2/catch.hpp" //#include "catch.hpp" unsigned int Factorial( unsigned int number ) { return number <= 1 ? number : Factorial(number-1)*number; } TEST_CASE( "Factorials are computed", "[factorial]" ) { REQUIRE( Factorial(1) == 1 ); REQUIRE( Factorial(2) == 2 ); REQUIRE( Factorial(3) == 6 ); REQUIRE( Factorial(10) == 3628800 ); }

Catch is header only. Compile this as

g++ example_test.cpp

then run the executable and the tests info will be presented on the screen.

Can you see if there is a bug in the factorial code? fix it and add

a test REQUIRE in the same file.

8.1.3. Code modularization

Modularization allows us to split the implementation, the interface, and the test.

To modularize the code we need to take the only function here, factorial, and

split it into a header and source file:

header file:

#pragma once int factorial(int n);

Implementation file:

#include "factorial.h" int factorial(int number) { return number <= 1 ? number : factorial(number-1)*number; }

Clearly this factorial.cpp file cannot be compiled into and

executable since it does no contain a main function. But it can be

used to construct and object file (flag -c on the compiler) to

later link with other object files.

Now we create a main file that will call the factorial function:

#include <iostream> #include "factorial.h" int main(void) { std::cout << factorial(4) << std::endl; return 0; }

And then compile it as

g++ -c factorial.cpp # creates factorial.o that can be used for the testing g++ -c factorial_main.cpp # creates main_factorial.o g++ factorial.o factorial_main.o -o main_factorial.x # links and creates executable ./main_factorial.x

8.1.4. Writing the test

Let’s first write the test

#define CATCH_CONFIG_MAIN // This tells Catch to provide a main() - only do this in one cpp file #include "catch2/catch.hpp" #include "factorial.h" TEST_CASE( "Factorials are computed", "[factorial]" ) { //REQUIRE( factorial(0) == 1 ); REQUIRE( factorial(1) == 1 ); REQUIRE( factorial(2) == 2 ); REQUIRE( factorial(3) == 6 ); REQUIRE( factorial(10) == 3628800 ); }

Now let’s run the test using the modularized code. First, let’s load catch

spack load catch2

Compile and execute as

g++ -c factorial.cpp g++ -c factorial_test.cpp g++ factorial.o factorial_test.o -o factorial_test.x ./factorial_test.x

Now you can test or run-with-not-tests independently.

8.1.5. Makefile

To compile this and future tests, is useful to implement all in a Makefile

SHELL:=/bin/bash all: factorial_main.x test: factorial_test.x ./$< %.x: %.o factorial.o source $$HOME/repos/spack/share/spack/setup-env.sh; \ spack load catch2; \ g++ $$(pkg-config --cflags catch2) $^ -o $@ %.o: %.cpp source $$HOME/repos/spack/share/spack/setup-env.sh; \ spack load catch2; \ g++ $$(pkg-config --cflags catch2) -c $< clean: rm -f *.o *.x

8.2. google test

Google test is a famous and advance unit framework that goes well beyond of what is shown here. You are invited to follow the docs to learn more.

8.2.1. Installation

Again, we will use spack

spack install googletest

mkdir googletest

8.2.2. Example

This is an example, already modularized.

- Factorial and isprime header:

#ifndef GTEST_SAMPLES_SAMPLE1_H_ #define GTEST_SAMPLES_SAMPLE1_H_ // Returns n! (the factorial of n). For negative n, n! is defined to be 1. int Factorial(int n); //// Returns true if and only if n is a prime number. bool IsPrime(int n); #endif // GTEST_SAMPLES_SAMPLE1_H_

- Source file

#include "factorial.h" // Returns n! (the factorial of n). For negative n, n! is defined to be 1. int Factorial(int n) { int result = 1; for (int i = 1; i <= n; i++) { result *= i; } return result; } // Returns true if and only if n is a prime number. bool IsPrime(int n) { // Trivial case 1: small numbers if (n <= 1) return false; // Trivial case 2: even numbers if (n % 2 == 0) return n == 2; // Now, we have that n is odd and n >= 3. // Try to divide n by every odd number i, starting from 3 for (int i = 3; ; i += 2) { // We only have to try i up to the square root of n if (i > n/i) break; // Now, we have i <= n/i < n. // If n is divisible by i, n is not prime. if (n % i == 0) return false; } // n has no integer factor in the range (1, n), and thus is prime. return true; }

- Test source file (to be compiled as an object)

#include <limits.h> #include "factorial.h" #include "gtest/gtest.h" namespace { // Tests factorial of negative numbers. TEST(FactorialTest, Negative) { // This test is named "Negative", and belongs to the "FactorialTest" // test case. EXPECT_EQ(1, Factorial(-5)); EXPECT_EQ(1, Factorial(-1)); EXPECT_GT(Factorial(-10), 0); } // Tests factorial of 0. TEST(FactorialTest, Zero) { EXPECT_EQ(1, Factorial(0)); } // Tests factorial of positive numbers. TEST(FactorialTest, Positive) { EXPECT_EQ(1, Factorial(1)); EXPECT_EQ(2, Factorial(2)); EXPECT_EQ(6, Factorial(3)); EXPECT_EQ(40320, Factorial(8)); } // Tests negative input. TEST(IsPrimeTest, Negative) { // This test belongs to the IsPrimeTest test case. EXPECT_FALSE(IsPrime(-1)); EXPECT_FALSE(IsPrime(-2)); EXPECT_FALSE(IsPrime(INT_MIN)); } // Tests some trivial cases. TEST(IsPrimeTest, Trivial) { EXPECT_FALSE(IsPrime(0)); EXPECT_FALSE(IsPrime(1)); EXPECT_TRUE(IsPrime(2)); EXPECT_TRUE(IsPrime(3)); } // Tests positive input. TEST(IsPrimeTest, Positive) { EXPECT_FALSE(IsPrime(4)); EXPECT_TRUE(IsPrime(5)); EXPECT_FALSE(IsPrime(6)); EXPECT_TRUE(IsPrime(23)); } }

- Main google test file

#include <cstdio> #include "gtest/gtest.h" GTEST_API_ int main(int argc, char **argv) { printf("Running main() from %s\n", __FILE__); testing::InitGoogleTest(&argc, argv); return RUN_ALL_TESTS(); }

9. Profiling

After debugging your code and writing several tests to avoid repeating the same bug, you want to start optimizing it to make it fast (but keeping it correct). To do this, you need to measure. You need to detect functions which take most of the time. Optimizing a function that takes only 5% of the time will give you only marginal benefits. Finding the functions that take most of the time is called profiling , and there are several tools ready to help you. In the following we ill learn to use these tools to detect the hotspots/bottlenecks in our codes.

9.1. Why profile?

Profiling allows you to learn how the computation time was spent and how the data flows in your program. This can be achieved by just printing the time spent on each section using some internal timers, or, better, by using a tool called “profiler” that shows you how time was spent in your program and which functions called which other functions.

Using a profiler is something that comes very handy when you want to verify that your program does what you want it to do, especially when it is not easy to analyze (it may contain many functions or many calls to different functions and so on).

Remember, WE ARE SCIENTISTS, so we want to profile code to optimize the computation time taken by our program. The key idea then becomes finding where (which function/subroutine) is the computation time spent and attack it using optimization techniques (studied in a previous session of this course, check this).

9.2. Measuring elapsed time

The first approach is to just add timers to your code. This is a good practice and it is useful for a code to report the time spent on different parts. The following example is extracted from here and modified to make it a bit simpler:

#include <cstdio> #include <cstdlib> /* Tests cache misses. */ int main(int argc, char **argv) { if (argc < 3){ printf("Usage: cacheTest sizeI sizeJ\nIn first input.\n"); return 1; } long sI = atoi(argv[1]); long sJ = atoi(argv[2]); printf("Operating on matrix of size %ld by %ld\n", sI, sJ); long *arr = new long[sI*sJ]; // double array. // option 1 for (long i=0; i < sI; ++i) for (long j=0; j < sJ; ++j) arr[(i * (sJ)) + j ] = i; // option 2 for (long i=0; i < sI; ++i) for (long j=0; j < sJ; ++j) arr[(j * (sI)) + i ] = i; // option 3 for (int i=0; i < sI*sJ; ++i) arr[i] = i; printf("%ld\n", arr[0]); return 0; }

Now let’s add some timers using the chrono c++11 library (you can see more

eamples here and here .

#include <cstdio> #include <cstdlib> #include <chrono> #include <iostream> /* Tests cache misses. */ template <typename T> void print_elapsed(T start, T end ); int main(int argc, char **argv) { if (argc < 3){ printf("Usage: cacheTest sizeI sizeJ\nIn first input.\n"); return 1; } long sI = atoi(argv[1]); long sJ = atoi(argv[2]); printf("Operating on matrix of size %ld by %ld\n", sI, sJ); auto start = std::chrono::steady_clock::now(); long *arr = new long[sI*sJ]; // double array. auto end = std::chrono::steady_clock::now(); print_elapsed(start, end); // option 1 start = std::chrono::steady_clock::now(); for (long i=0; i < sI; ++i) for (long j=0; j < sJ; ++j) arr[(i * (sJ)) + j ] = i; end = std::chrono::steady_clock::now(); print_elapsed(start, end); // option 2 start = std::chrono::steady_clock::now(); for (long i=0; i < sI; ++i) for (long j=0; j < sJ; ++j) arr[(j * (sI)) + i ] = i; end = std::chrono::steady_clock::now(); print_elapsed(start, end); // option 3 start = std::chrono::steady_clock::now(); for (int i=0; i < sI*sJ; ++i) arr[i] = i; end = std::chrono::steady_clock::now(); print_elapsed(start, end); printf("%ld\n", arr[0]); return 0; } template <typename T> void print_elapsed(T start, T end ) { std::cout << "Elapsed time in ms: " << std::chrono::duration_cast<std::chrono::milliseconds>(end-start).count() << "\n"; }

Analyze the results.

9.3. Profilers

There are many types of profilers from many different sources, commonly, a profiler is associated to a compiler, so that for example, GNU (the community around gcc compiler) has the profiler ’gprof’, intel (corporation behind icc) has iprof, PGI has pgprof, etc. Valgrind is also a useful profiler through the cachegrind tool, which has been shown at the debugging section.

This crash course (more like mini tutorial) will focus on using gprof. Note that gprof supports (to some extend) compiled code by other compilers such as icc and pgcc. At the end we will briefly review perf, and the google performance tools.

FACT 1: According to Thiel, “gprof … revolutionized the performance analysis field and quickly became the tool of choice for developers around the world …, the tool is still actively maintained and remains relevant in the modern world.” (from Wikipedia).

FACT 2: Top 50 most influential papers on PLDI (from Wikipedia).

9.4. gprof

Inthe following we will use the following code as example:

Example 1: Basic gprof (compile with gcc)

//test_gprof.c #include<stdio.h> void new_func1(void); void func1(void); static void func2(void); void new_func1(void); int main(void) { printf("\n Inside main()\n"); int i = 0; for(;i<0xffffff;i++); func1(); func2(); return 0; } void new_func1(void); void func1(void) { printf("\n Inside func1 \n"); int i = 0; for(;i<0xffffffff;i++); new_func1(); return; } static void func2(void) { printf("\n Inside func2 \n"); int i = 0; for(;i<0xffffffaa;i++); return; } void new_func1(void) { printf("\n Inside new_func1()\n"); int i = 0; for(;i<0xffffffee;i++); return; }

Para usar gprof:

Compilar con

-pggcc -Wall -pg -g test_gprof.c -o test_gprof

Ejecutar normalmente. Esto crea o sobreescribe un archivo

gmon.out./test_gprof

Abrir el reporte usando

gprof test_gprof gmon.out > analysis.txt

This produces a file called ’analysis.txt’ which contains the profiling information in a human-readable form. The output of this file should be something like the following:

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls s/call s/call name

39.64 9.43 9.43 1 9.43 16.79 func1

30.89 16.79 7.35 1 7.35 7.35 new_func1

30.46 24.04 7.25 1 7.25 7.25 func2

0.13 24.07 0.03 main

% the percentage of the total running time of the

time program used by this function.

cumulative a running sum of the number of seconds accounted

seconds for by this function and those listed above it.

self the number of seconds accounted for by this

seconds function alone. This is the major sort for this

listing.

calls the number of times this function was invoked, if

this function is profiled, else blank.

self the average number of milliseconds spent in this

ms/call function per call, if this function is profiled,

else blank.

total the average number of milliseconds spent in this

ms/call function and its descendents per call, if this

function is profiled, else blank.

name the name of the function. This is the minor sort

for this listing. The index shows the location of

the function in the gprof listing. If the index is

in parenthesis it shows where it would appear in

the gprof listing if it were to be printed.

Call graph (explanation follows)

granularity: each sample hit covers 2 byte(s) for 0.04% of 24.07 seconds

index % time self children called name

<spontaneous>

[1] 100.0 0.03 24.04 main [1]

9.43 7.35 1/1 func1 [2]

7.25 0.00 1/1 func2 [4]

-----------------------------------------------

9.43 7.35 1/1 main [1]

[2] 69.7 9.43 7.35 1 func1 [2]

7.35 0.00 1/1 new_func1 [3]

-----------------------------------------------

7.35 0.00 1/1 func1 [2]

[3] 30.5 7.35 0.00 1 new_func1 [3]

-----------------------------------------------

7.25 0.00 1/1 main [1]

[4] 30.1 7.25 0.00 1 func2 [4]

-----------------------------------------------

This table describes the call tree of the program, and was sorted by

the total amount of time spent in each function and its children.

Each entry in this table consists of several lines. The line with the

index number at the left hand margin lists the current function.

The lines above it list the functions that called this function,

and the lines below it list the functions this one called.

This line lists:

index A unique number given to each element of the table.

Index numbers are sorted numerically.

The index number is printed next to every function name so

it is easier to look up where the function is in the table.

% time This is the percentage of the `total' time that was spent

in this function and its children. Note that due to

different viewpoints, functions excluded by options, etc,

these numbers will NOT add up to 100%.

self This is the total amount of time spent in this function.

children This is the total amount of time propagated into this

function by its children.

called This is the number of times the function was called.

If the function called itself recursively, the number

only includes non-recursive calls, and is followed by

a `+' and the number of recursive calls.

name The name of the current function. The index number is

printed after it. If the function is a member of a

cycle, the cycle number is printed between the

function's name and the index number.

For the function's parents, the fields have the following meanings:

self This is the amount of time that was propagated directly

from the function into this parent.

children This is the amount of time that was propagated from

the function's children into this parent.

called This is the number of times this parent called the

function `/' the total number of times the function

was called. Recursive calls to the function are not

included in the number after the `/'.

name This is the name of the parent. The parent's index

number is printed after it. If the parent is a

member of a cycle, the cycle number is printed between

the name and the index number.

If the parents of the function cannot be determined, the word

`<spontaneous>' is printed in the `name' field, and all the other

fields are blank.

For the function's children, the fields have the following meanings:

self This is the amount of time that was propagated directly

from the child into the function.

children This is the amount of time that was propagated from the

child's children to the function.

called This is the number of times the function called

this child `/' the total number of times the child

was called. Recursive calls by the child are not

listed in the number after the `/'.

name This is the name of the child. The child's index

number is printed after it. If the child is a

member of a cycle, the cycle number is printed

between the name and the index number.

If there are any cycles (circles) in the call graph, there is an

entry for the cycle-as-a-whole. This entry shows who called the

cycle (as parents) and the members of the cycle (as children.)

The `+' recursive calls entry shows the number of function calls that

were internal to the cycle, and the calls entry for each member shows,

for that member, how many times it was called from other members of

the cycle.

Index by function name

[2] func1 [1] main

[4] func2 [3] new_func1

The output has two sections: >*Flat profile:* The flat profile shows the total amount of time your program spent executing each function.

Call graph: The call graph shows how much time was spent in each function and its children.

9.5. perf

9.5.1. Installing perf

Perf is a kernel module, so you will need to install it from the kernel source. As root, the command used to install perl in the computer room was

cd /usr/src/linux/tools/perf/; make -j $(nproc); cp perf /usr/local/bin

This will copy the perf executable into the path.

9.5.2. Using perf

Perf is a hardware counter available on linux platforms.

Its use is very simple: Just run, For a profile summary,

perf stat ./a.out > profile_summary

For gprof-like info, use

perf record ./a.out perf report

9.5.3. Hotstop: Gui for perf

https://github.com/KDAB/hotspot Install with the appimage: Download it, make it executable, run.

In the computer room you can also use spack:

spack load hotspot-perf

And then just use the command hotspot .

9.6. Profiling with valgrind: cachegrind and callgrind

Valgrind allows not only to debug a code but also to profile it. Here we will see how to use cachegrind, to check for cache misses, and callgrind, for a calling graph much like tools like perf and gprof.

9.6.1. Cache checker : cachegrind

From cachegrind

Cachegrind simulates how your program interacts with a machine'scache hierarchy and (optionally) branch predictor. It simulates a machine with independent first-level instruction and data caches (I1 and D1), backed by a unified second-level cache (L2). This exactly matches the configuration of many modern machines.

However, some modern machines have three levels of cache. For these machines (in the cases where Cachegrind can auto-detect the cache configuration) Cachegrind simulates the first-level and third-level caches. The reason for this choice is that the L3 cache has the most influence on runtime, as it masks accesses to main memory. Furthermore, the L1 caches often have low associativity, so simulating them can detect cases where the code interacts badly with this cache (eg. traversing a matrix column-wise with the row length being a power of 2).

Therefore, Cachegrind always refers to the I1, D1 and LL (last-level) caches.

To use cachegrind, you will need to invoke valgrind as

valgrind --tool=cachegrind prog

Take into account that execution will be (possibly very) slow.

Typical output:

==31751== I refs: 27,742,716 ==31751== I1 misses: 276 ==31751== LLi misses: 275 ==31751== I1 miss rate: 0.0% ==31751== LLi miss rate: 0.0% ==31751== ==31751== D refs: 15,430,290 (10,955,517 rd + 4,474,773 wr) ==31751== D1 misses: 41,185 ( 21,905 rd + 19,280 wr) ==31751== LLd misses: 23,085 ( 3,987 rd + 19,098 wr) ==31751== D1 miss rate: 0.2% ( 0.1% + 0.4%) ==31751== LLd miss rate: 0.1% ( 0.0% + 0.4%) ==31751== ==31751== LL misses: 23,360 ( 4,262 rd + 19,098 wr) ==31751== LL miss rate: 0.0% ( 0.0% + 0.4%)

The output and more info will be written to cachegrind.out.<pid>,

where pid is the PID of the process. You can open that file with

valkyrie for better analysis.

The tool cg_annonate allows you postprocess better the file

cachegrind.out.<pid>.

Compile the file cacheTest.cc,

$ g++ -g cacheTest.cc -o cacheTest

Now run valgrind on it, with cache checker

$ valgrind --tool=cachegrind ./cacheTest 0 1000 100000

Now let’s check the cache-misses per line of source code:

cg_annotate --auto=yes cachegrind.out.PID

where you have to change PID by the actual PID in your results.

Fix the code.

- More cache examples

Please open the file

cache.cppwhich is inside the directory valgrind. Read it. Comment the linestd::sort(data, data + arraySize);

Compile the program and run it, measuring the execution time (if you wish, you can use optimization):

$ g++ -g cache.cpp -o cache.x $ time ./cache.x

The output will be something like

26.6758 sum = 312426300000 real 0m32.272s user 0m26.560s sys 0m0.122s

Now uncomment the same line, re-compile and re-run. You will get something like

5.37881 sum = 312426300000 real 0m6.180s user 0m5.360s sys 0m0.026s